
Online Hate and Harassment: The American Experience 2020

Executive Summary

Since its inception, social media has played a key role in shaping social, cultural and political developments. This year in particular has seen a tectonic shift in the way communities across the world integrate digital and social networks into their daily lives. The novel coronavirus (and the disease it causes, COVID-19) has spread aggressively, claiming thousands of lives in the United States[i], devastating marginalized populations in major cities, crippling employment and economic opportunities for millions, and forcing a large portion of the global population to work from home in a digital environment. And as our world continues to be redefined through digital services and online discourse, the American public has become increasingly aware of and exposed to online hate and harassment. The Asian, Jewish, Muslim, and immigrant communities in particular are experiencing an onslaught of targeted hate, fueled by antisemitic conspiracy theories, anti-Asian bigotry, and Islamophobia surrounding the novel coronavirus[ii][iii][iv].

The survey underpinning this report predates the digital reality shaped by the coronavirus pandemic. And while ADL has tracked a surge in hateful conspiracy theory content related to the virus across social media platforms, the online hate ecosystem was thriving well before it. ADL’s first survey report on the scope of online hate, published in 2019,[v] revealed that exposure to toxic content had reached unprecedented levels. Yet the increasing reliance on digital engagement in all spheres of life brought about by the virus will undoubtedly create new opportunities for exploitation by those seeking to harm others using digital services and tools. And the shift to work-from-home models at technology companies has significantly disrupted the content moderation and safety operations of major social media platforms, as companies have moved toward greater reliance on artificial intelligence (AI) systems to combat online hate and harassment.[vi] The findings of this report therefore have historic significance: they capture the online landscape at a flashpoint, on the cusp of a global pandemic. Subsequent ADL surveys on this topic will use these findings as a reference point for estimating the impact that greater reliance on AI has had on moderating speech on the internet.

Key Findings

This report is based on a nationally representative survey of Americans conducted from January 17, 2020 to January 30, 2020, with 1,974 respondents, and sheds light on the trends in the online hate and harassment ecosystems. The report is an annual follow-up to our first report on this topic, titled “Online Hate and Harassment: The American Experience,”[vii] and provides us with the opportunity to compare the average online experience of Americans across years.

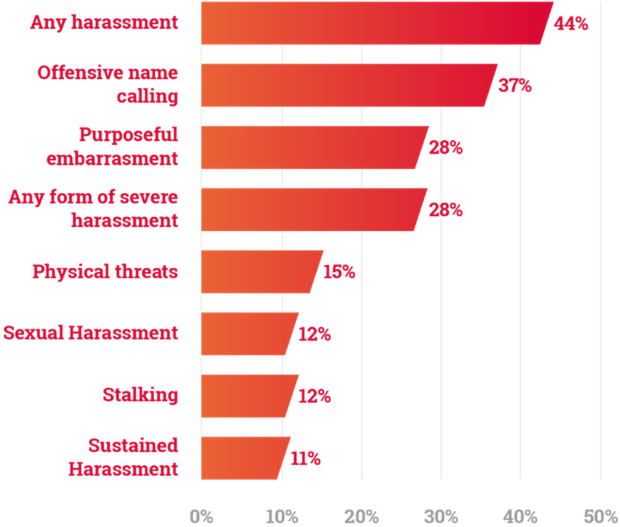

This nationally representative survey finds that harassment is a common aspect of many Americans’ lives, and that its nature appears to be changing dramatically as minority groups perceive higher levels of targeting based on their protected characteristics. This year, 44% of Americans who responded to our survey said that they experienced online harassment, lower than the 53% reported in last year’s Online Hate and Harassment report. Notably, however, the incidence of perceived identity-based harassment has increased, significantly so in some cases. So, while it may be “safer” to be online in general this year than last, it is ultimately harder and less safe to be online as a member of a marginalized group. On the whole, online harassment related to a target’s identity-based characteristics[viii] increased from 32% to 35%. LGBTQ+ individuals, Muslims, Hispanics or Latinos, and African-Americans faced especially high rates of identity-based harassment. Respondents also reported a doubling of religion-based harassment, from 11% to 22%, while race-based harassment increased from 15% to 25%.

Equally worrying is that 28% of respondents experienced severe online harassment, which includes sexual harassment, stalking, physical threats, swatting, doxing and sustained harassment. This is down from the 37% reported in last year’s survey.

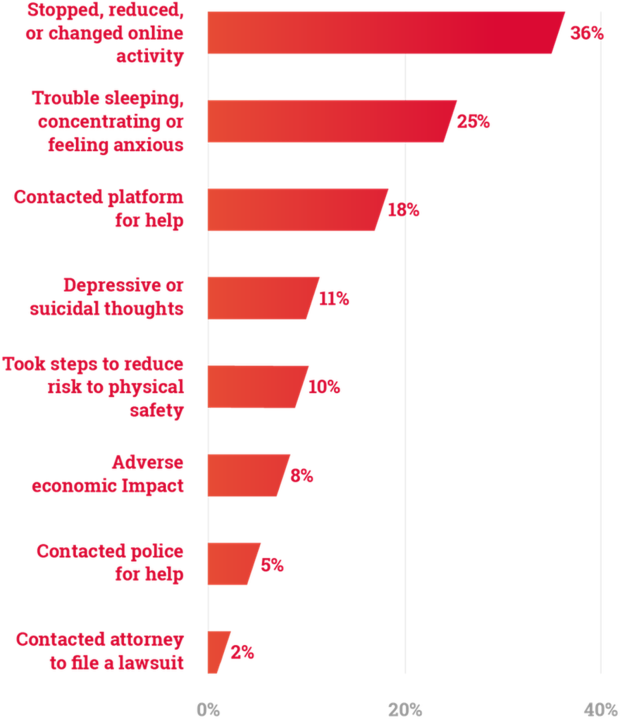

Online harassment impacts the target in a variety of ways. The most common response is to stop, reduce or change online behavior, which 36% of those who have been harassed have done. This can include steps like posting less often, avoiding certain sites, changing privacy settings, deleting apps, or increasing filtering of content or users. Many go further, with 18% of harassment targets contacting the technology platform to ask for help or report harassing content. In some cases, these behaviors were coupled with other impacts including thoughts of depression and suicide, anxiety, and economic impact.

Americans overwhelmingly want to see concrete steps taken to address online hate and harassment. The survey shows that across political ideologies, the vast majority of Americans believe that private technology companies and the government need to take action against online hate and harassment.

In fact, 87.5% of Americans somewhat or strongly agree that the government should strengthen laws and improve training and resources for police on online hate and harassment.

Americans also want platforms to take more action to counter or mitigate the problem. Seventy-seven percent of Americans want companies to make it easier to report hateful content and behavior. In addition, an overwhelming percentage of survey respondents (80%) want companies to label comments and posts that appear to come from automated “bots” rather than people.

Methodology

A survey of 1,974 individuals was conducted on behalf of ADL by YouGov, a leading public opinion and data analytics firm, examining Americans’ experiences with and views of online hate and harassment. Eight hundred and seventy-six surveys were collected to form a nationally representative base of respondents with additional oversamples from individuals who identified as Jewish, Muslim, African-American, Asian-American, Hispanic or Latino, or LGBTQ+. For the oversampled target groups, responses were collected until at least 200 Americans were represented from each of those groups. We oversampled the Jewish population until 500 Jewish Americans responded. Data was weighted on the basis of age, gender identity, race, census region and education to adjust for national representation. YouGov surveys are taken independently online by a prescreened set of panelists representing many demographic categories. Panelists are weighted for statistical relevance to national demographics. Participants are rewarded for general participation in YouGov surveys but were not directly rewarded by ADL for their participation in this survey. Surveys were conducted from January 17, 2020 to January 30, 2020. The margin of sampling error for the full sample of respondents is plus or minus 3 percentage points.

Results

Prevalence and Nature of Online Hate and Harassment

A little less than half (44%) of Americans experienced some type of online harassment. This is lower than the 53% reported in last year’s report but higher than the 41% reported in response to a comparable question asked in 2017 by the Pew Research Center. The most prevalent forms of harassment were generally isolated incidents: some 37% of Americans were subjected to offensive name-calling and 28% had someone try to purposely embarrass them. More severe forms of harassment were also commonly experienced, with 28% of American adults reporting such an experience, down from 36% in last year’s report but up from the 18% reported in a Pew study from 2017. Consistent with the Pew Research Center, we defined “severe harassment” as including physical threats, sexual harassment, stalking and sustained harassment. Of the respondents who contacted the police, 57% stated that they were given meaningful tools to protect themselves, and only 32% stated that the police followed up after the initial interaction.

Fifteen percent of respondents reported being subjected to physical threats online and 12% experienced sexual harassment and stalking, while 11% experienced sustained harassment. On a follow-up question for those who had experienced physical threats, 38% of respondents stated that the platform did not take any action on the threatening post, while 33% did not flag the post in question. This raises questions about why targets of online harassment might choose not to use reporting features on social media platforms, even when faced with threats of physical violence.

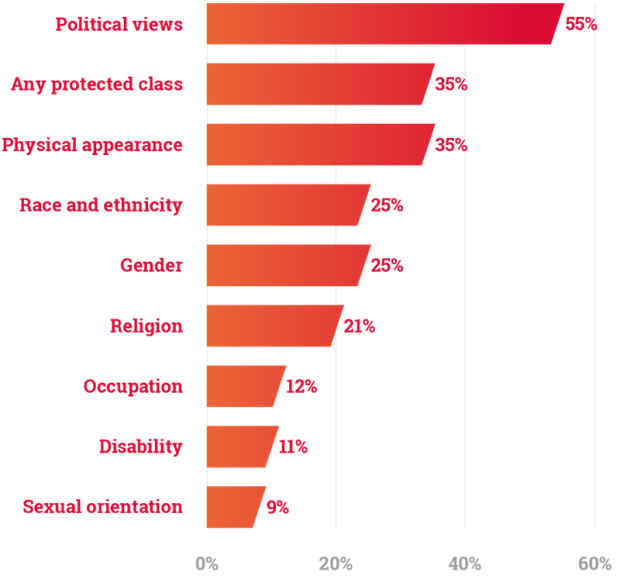

Online harassment can occur for a variety of reasons, and the survey asked specifically about perceived causes. Over one-third (35%) of Americans who had been harassed reported they felt the harassment was a result of their sexual orientation, religion, race or ethnicity, gender identity, or disability. Around one-in-five respondents who had experienced online harassment believed it was because of their religion (21%), which is nearly twice the amount reported last year (11%). One-in-four (25%) respondents felt the focus of their online harassment experience was their gender and some 25% because of their race or ethnicity, up significantly from the 15% reported in last year’s report. Around one-in-ten had been targeted as a result of their sexual orientation (9%), occupation (12%), or disability (11%). One consequence of widespread online hate and harassment is that it leaves people worried about being targeted in the future: 26% of those who had previously experienced harassment and 11% of Americans who had not experienced harassment reported worrying about future harassment.

In addition, 55% of those who were harassed reported that their political views drove at least part of the harassment, while 35% reported harassment due to their physical appearance. Both figures are significantly higher than last year, when each stood at 21%.

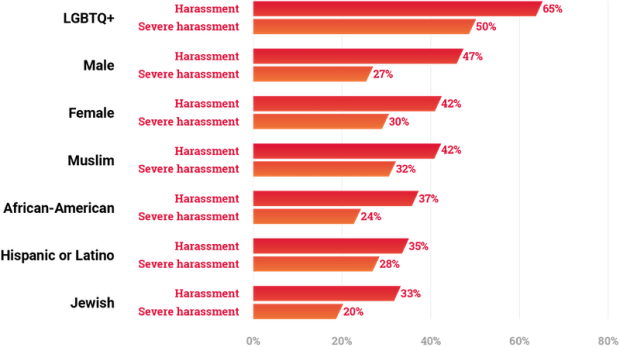

The survey results also shed light on the experience of online hate and harassment for specific identity-based groups. Sixty-five percent of LGBTQ+ respondents experienced online harassment, the highest overall incidence of harassment for any group. This is down from the 76% found in the 2019 survey results but remains deeply concerning. LGBTQ+ respondents also experienced the highest levels of severe harassment, with 50% of respondents reporting being targeted through stalking, sexual harassment, and physical threats.

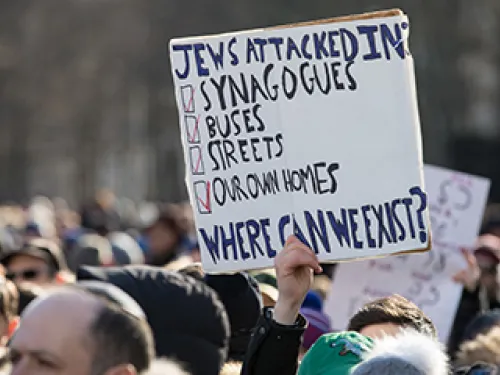

Similarly, while we see a drop in reported harassment figures across all groups, those figures remain especially high among religious and racial minorities. Thirty-three percent of Jewish respondents experienced online harassment, of which 20% experienced severe harassment. This is down from the 48% of Jewish respondents who reported harassment in 2019. Muslim respondents also reported a drop in the incidence of harassment, from 59% in 2019 to 42% in 2020, with 32% reporting severe harassment. More than one-third of African-American (37%) and Hispanic (35%) respondents also experienced online harassment. The overall incidence of harassment for men and women remained similar to last year, with 42% of female-identifying respondents and 47% of male-identifying respondents reporting being the target of online harassment.

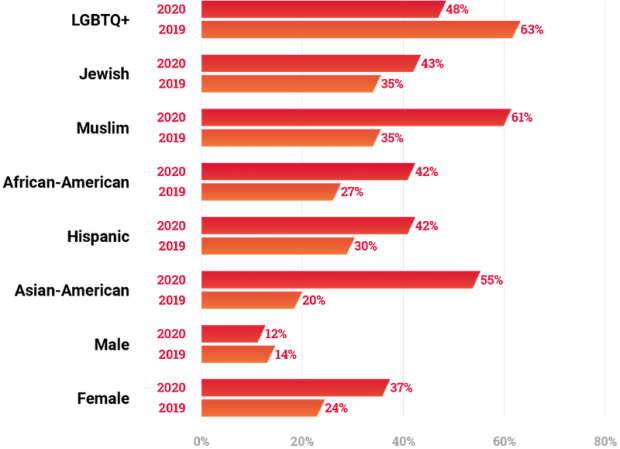

However, the composition of harassment changes when we look at identity-based groups according to respondents’ perceived reason for harassment. Identity-based harassment was most common against Muslim respondents, with 61% of Muslims who reported experiencing online harassment stating they felt they were targeted because of their religion, a significant increase from the 35% reported last year. Religion-based harassment showed a clear upward trend, as 43% of Jewish respondents reported they felt they were targeted with hateful content because of their religion, up from 16% last year. While still high, fewer LGBTQ+ respondents reported harassment based on their sexual orientation (48%) than the 63% reported last year. Identity-based harassment was also common among other minority groups, with race-based harassment affecting 55% of Asian-Americans and 42% of Hispanic and African-American respondents. Finally, women also experienced harassment disproportionately: 37% of female-identified respondents felt they were targeted because of their gender, significantly higher than in last year’s survey (24%) and higher than the 12% of men who experienced gender-based harassment.

This reveals a notable trend: while overall harassment figures appear to be declining, the perceived targeting of specific identities and groups is on the rise.

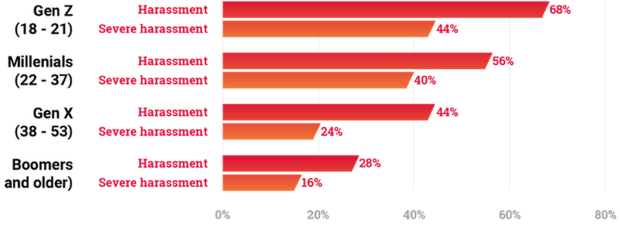

While online hate and harassment is prevalent across all age groups, younger Americans report higher rates than older Americans. The clear majority (68%) of 18–21-year-olds (Gen Z) experienced some form of hate or harassment, with 44% reporting severe harassment. Additionally, 56% of 22–37-year-olds (Millennials) and 44% of 38–53-year-olds (Gen X) were targeted. Among Americans 54 and over (Baby Boomers), 28% were targeted (16% severely).

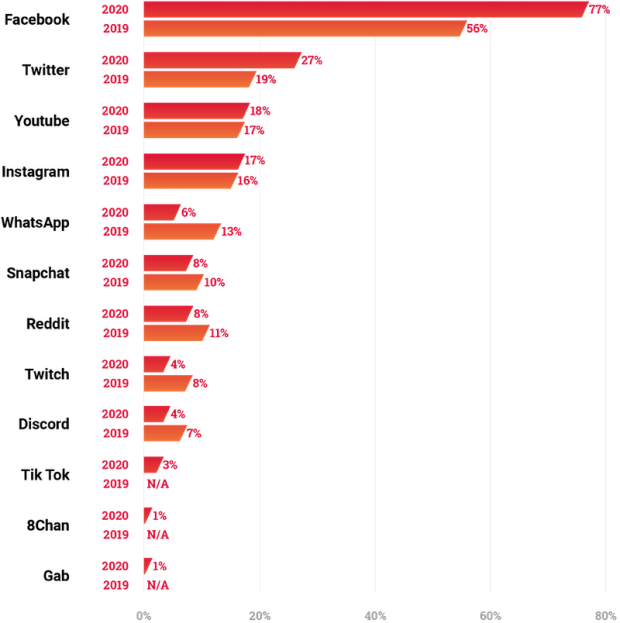

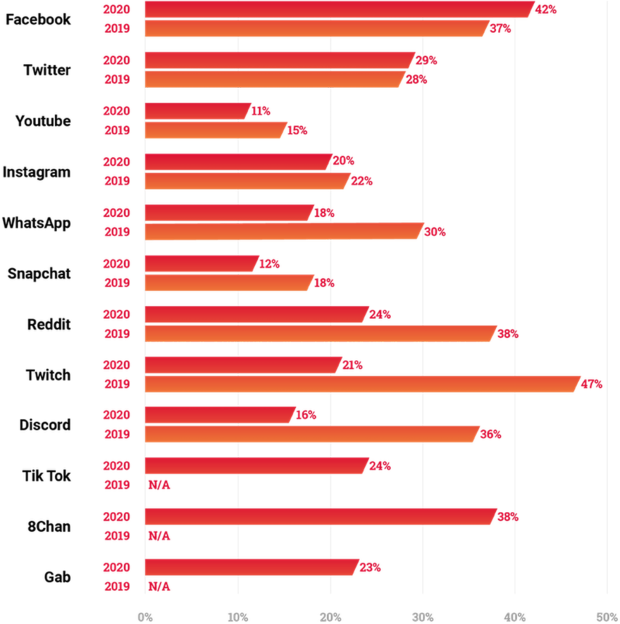

The survey also asked where hate and harassment had occurred online. Of those respondents who were harassed online, more than three-quarters (77%) reported that at least some of their harassment occurred on Facebook. Smaller shares experienced harassment or hate on Twitter (27%), YouTube (18%), Instagram (17%), Reddit (8%), Snapchat (8%), WhatsApp (6%), Twitch (4%) and Discord (4%).

This analysis sheds light on where online harassment occurs, but not on how common it is relative to each platform’s user base. To explore the rate of hate and harassment on each platform, the survey asked about respondents’ use of different platforms. Graph 7 depicts the proportion of regular users (defined as using the platform at least once a day) who experienced harassment on that platform. The results suggest higher rates of harassment among regular users of Facebook, Gab, Twitter, Instagram, WhatsApp, and Twitch. Note that the results may underestimate the amount of harassment on the platforms, because some targets may have since stopped using a platform for reasons either related or unrelated to the harassment.

Impact of Online Harassment

Many respondents who were targeted, or who fear being targeted, with hateful or harassing content reported effects on their economic, emotional, physical and psychological well-being. Twenty-five percent of respondents had trouble sleeping or concentrating, or felt anxious, while 11% had depressive or suicidal thoughts as a result of their experience with online hate. Eight percent of respondents reported experiencing an adverse economic impact as a result of online harassment. Some 36% stopped, reduced or changed their activities online, such as posting less often, avoiding certain sites, changing privacy settings, deleting apps, or increasing filtering of content or users. Ten percent took steps to reduce risk to their physical safety, such as moving locations, changing their commute, taking a self-defense class, avoiding being alone, or avoiding certain locations. Some respondents attempted to get help, either from companies or law enforcement: 18% contacted the platform, 5% contacted the police to ask for help or report online hate or harassment, and only 2% contacted an attorney or filed a lawsuit.

Actions to Address Online Hate and Harassment

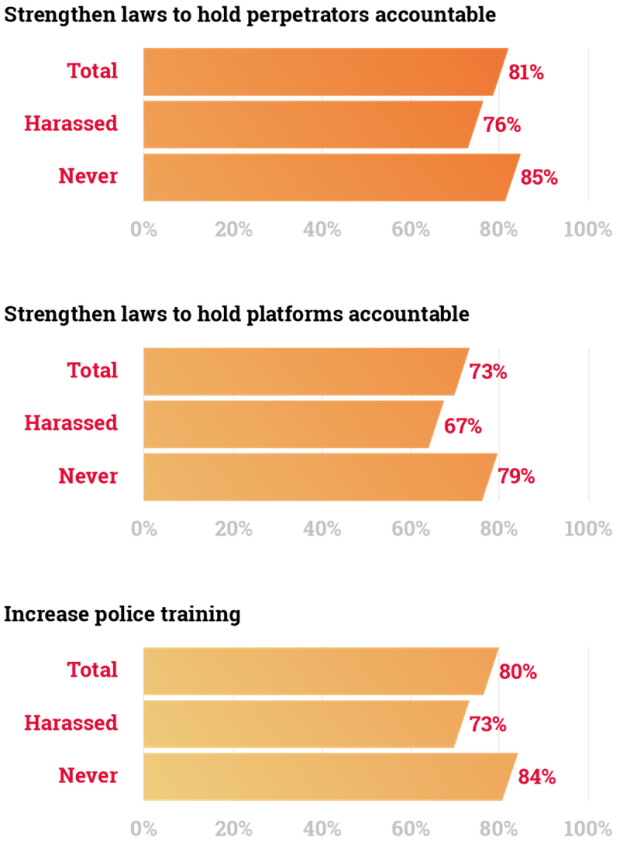

Americans overwhelmingly want platforms, law enforcement agencies and policymakers to address the problem of online hate and harassment. Over 87% of Americans want the government to act by strengthening laws and improving training and resources for police on responding to online hate and harassment, up from the 80% who felt similarly in last year’s report. And 81% strongly or somewhat agree that there should be laws to hold perpetrators of online hate accountable for their conduct.

Strong support exists for these changes regardless of whether an individual has previously experienced online hate and harassment. Those who were targeted held similar views to those who had not experienced online harassment.

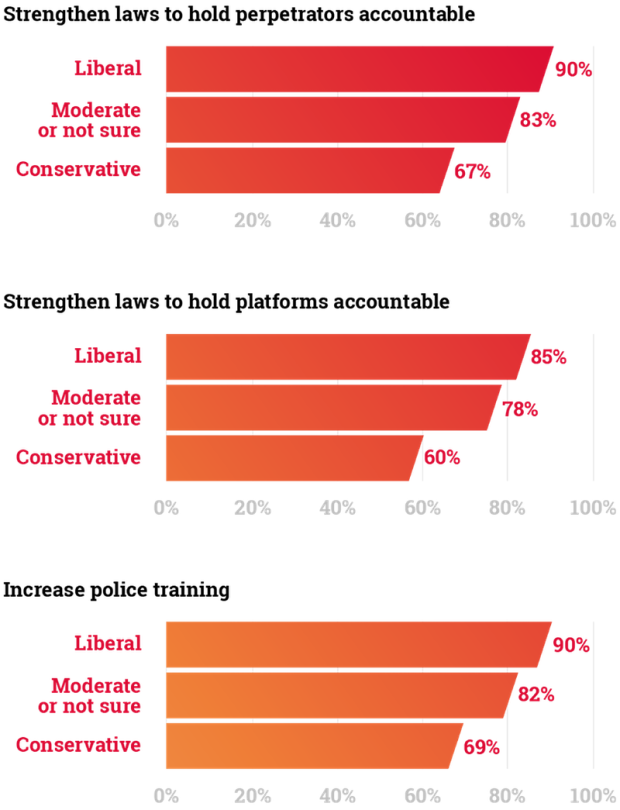

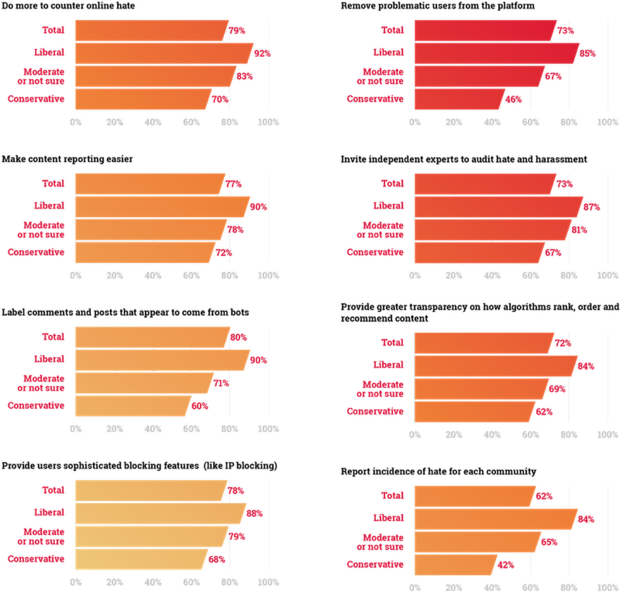

Support for these recommendations came from respondents across the political spectrum.

Americans also want to see private technology companies take action to counter or mitigate online hate and harassment, with 79% of respondents saying they strongly or somewhat agree with the statement that platforms should do more to counter online hate. Seventy-seven percent want platforms to make it easier for users to report hateful and harassing content. In addition, 80% of Americans want companies to label comments and posts that appear to come from automated “bots” rather than people. An overwhelming majority of respondents (78%) also want platforms to give users more control over their online space by providing more sophisticated blocking features, such as IP blocking. Finally, a large percentage of respondents (73%) were in favor of platforms removing problematic users as well as having outside experts independently assess the amount of hate on a platform. As with the government and societal recommendations, comparable support existed for these recommendations regardless of whether a respondent had previously experienced harassment.

There is also significant interest in greater transparency from social media platforms. Seventy-two percent of respondents want platforms to provide greater transparency on how their algorithms order, rank, and recommend content, while 64% want platforms to report the incidence of hate against different communities in their transparency reports (for example, the number of pieces of content removed for anti-LGBTQ+, antisemitic, anti-Muslim, and anti-immigrant hate).

A significant number of respondents also indicated an interest in the independent auditing of social media platforms. Sixty-two percent strongly or somewhat agreed with the statement that platforms should have outside experts and academics independently research and report on the amount of hate on their platform.

As with the government and societal recommendations, support for these recommendations is strong across the political spectrum.

Recommendations

This report’s findings show that the vast majority of the American public — across demographics, political ideology, and past experience with online harassment — want both government and private technology companies to take action against online hate and harassment.

For Social Media Companies

The survey results in this report highlight a consistent demand by users (79% of respondents) for social media companies to do more to counter online hate and harassment. An overwhelming majority of respondents also agree with recommendations for increased user control of their online space (78%), improved tools for reporting or flagging hateful content (77%), increased transparency (72%), and accountability in the form of an independent audit (62%).

Ensure strong policies against hate

Social media platforms should have better community guidelines or standards that comprehensively address hateful content and harassing behavior, and clearly define consequences for violations. Platforms should prohibit toxic content from being monetized to ensure their platform cannot be used to fund extremist individuals, groups and movements. They should also prohibit the sharing of links that take users to websites known for encouraging hate and harassment.

Strengthen enforcement of policies

Social media platforms should assume greater responsibility to enforce their policies and to do so accurately at scale. Platforms need to use a mix of human reviewers, AI, and an appeals process that all work together to enforce their policies. Optical character recognition tools can be used to automate the process of detecting hate symbols.

Design to reduce the influence and impact of hateful content

Social media companies should redesign their platforms and adjust their algorithms to reduce the influence of hateful content and harassing behavior. Borderline content shared by users who have been flagged multiple times should not appear on news feeds and home pages.

Expand tools and services for targets

Given the prevalence of online hate and harassment, platforms should offer far more services and tools for individuals facing or fearing online attack. When AI detects obvious hate speech in a piece of content, platforms should display an interstitial notification between the moment someone submits that content and the moment it is published. Users should also be allowed to flag multiple pieces of content within one report instead of having to create a new report for each piece of content being flagged.

Increase accountability and transparency

Platforms should adopt robust governance. This should include regularly scheduled external, independent audits so that the public knows the extent of hate and harassment on a given platform. Audits would also allow the public to verify that the company followed through on its stated actions and to assess the effectiveness of company efforts over time. Companies should provide information from the audit and elsewhere through more robust transparency reports. Transparency reports should include data from user-generated, identity-based reporting. For example, knowing the scale and nature of antisemitic content on a given platform will be useful to researchers and practitioners developing solutions to these problems.

For Government

A large portion of survey respondents agree that the government has a role to play in efforts aimed at reducing online hate and harassment. Over 87% of Americans want the government to act by strengthening laws and improving training and resources for police on online hate and harassment, which is up from the 80% that felt similarly in last year’s report. And an overwhelming majority of respondents agree that there should be laws to hold perpetrators of online hate accountable for their conduct (81%). Further, officials at all levels of government can use their bully pulpits to call for better enforcement of private technology companies’ own policies.

Government can address online hate and harassment through legislation, training, and research:

Strengthen laws against perpetrators of online hate

Hate and harassment exist both offline and in online spaces, but our laws have not kept up. Many forms of severe online misconduct are not consistently or adequately covered by the cybercrime, harassment, stalking and hate crime laws currently on the books. State and federal lawmakers have an opportunity to lead the fight against online hate and harassment by increasing protections for targets as well as penalties for perpetrators of severe and abusive online misconduct. Lawmakers can, for example, legislate to ensure that constitutional and comprehensive laws cover cybercrimes such as doxing, swatting, cyberstalking, cyberharassment, non-consensual distribution of intimate imagery, video-teleconferencing attacks, and unlawful and deceptive synthetic media (sometimes called “deep fakes”).

Improve training of law enforcement

Law enforcement is a key responder to online hate and harassment, especially in cases where users feel they are in imminent danger. Increasing training and resources for agencies is critical to ensure that law enforcement personnel can better support targets who contact them for help. Additionally, better training and resources can support more effective investigations and prosecutions in these types of cases.

Review the tools and services platforms provide to their users to mitigate online hate

Users, especially those who have been or are likely to be targeted, rely on platforms to provide them with tools and services to prevent and defend themselves against online hate and harassment. Congress should commission research that summarizes the tools platforms currently provide to help users protect and defend themselves. The review process should also include a needs assessment of users and a gap analysis of available tools and services.

Officials can also use the bully pulpit to encourage social media platforms to be more responsive to civil society’s concerns:

Strong Community Guidelines

Every social media and online game platform must have clear community guidelines that address hateful content and harassing behavior, and clearly define consequences for violations. As the purveyors of hate evolve and use new methods to spread hate and increase harm to their targets, community guidelines and their enforcement need to evolve as well. Policies and guidelines are only as good as their enforcement, and platforms must vigorously and effectively enforce their policies against egregiously hateful and harmful content.

Independent and Verifiable Audits Around Antisemitism and Other Forms of Hate

Social media and online game platforms should adopt robust governance. This should include regularly scheduled external, independent audits so that the public knows the extent of hate and harassment on a given platform. Audits should also allow the public to verify that the company followed through on its stated actions and to assess the effectiveness of company efforts over time.

Demonetize Hate

Government should explore the numerous avenues that hate groups and extremists use to fundraise online. Online hate has been monetized by users on platforms and through services that facilitate payments. This creates perverse incentives for content creators to focus on topics that attract users interested in hateful ideologies and conspiracy theories, such as Holocaust denial. The most significant new type of funding for the white supremacist movement has been crowdfunding or crowdsourcing, which can be used by both individuals and groups. White supremacist organizations have also been known to generate advertising revenue through their websites in sophisticated ways. These methods include using advertising services like Google AdSense or DoubleClick, which automate the placement of advertisements on a website without the website owner and the advertising company having to interact, and without the advertising company having to explicitly opt in for its ads to be placed on any particular website.[ix]

End Notes

[i] https://www.cdc.gov/coronavirus/2019-ncov/cases-updates/cases-in-us.html

[ii] Extremists Use Coronavirus to Advance Racist ... (n.d.). Retrieved May 26, 2020, from https://www.adl.org/blog/extremists-use-coronavirus-to-advance-racist-conspiratorial-agendas

[iii] Islamophobes React to Coronavirus Pandemic with Anti-Muslim Bigotry. (2020, April 30). Retrieved May 26, 2020, from https://www.adl.org/blog/islamophobes-react-to-coronavirus-pandemic-with-anti-muslim-bigotry

[iv] Coronavirus Crisis Elevates Antisemitic, Racist Tropes. (2020, March 17). Retrieved May 26, 2020, from https://www.adl.org/blog/coronavirus-crisis-elevates-antisemitic-racist-tropes

[v] https://www.adl.org/onlineharassment

[vi] Matsakis, L. (2020). Coronavirus Disrupts Social Media’s First Line of Defense. Retrieved 11 May 2020, from https://www.wired.com/story/coronavirus-social-media-automated-content-moderation/

[vii] Based on an ADL survey conducted from December 17, 2018 to December 27, 2018

[viii] Includes race or ethnicity, religion, gender identity, sexual orientation or disability

[ix] How Anti-Semitic And Holocaust-Denying Websites Are Using ... (n.d.). Retrieved May 27, 2020, from https://www.mediamatters.org/google/how-anti-semitic-and-holocaust-denying-websites-are-using-google-adsense-revenue