Hate spreads on social media platforms through influential accounts that act as nodes in broader networks and exploit inadequate policies. Twitter’s decision in November 2022 to reinstate previously banned, influential accounts with a history of hate presented an opportunity to observe and record this networked relationship.

The ADL Center for Technology & Society (CTS) monitored 65 reinstated accounts and analyzed their activity for violations of Twitter’s hateful conduct and coordinated harmful activity policies (see Methods). By focusing on reinstated accounts, CTS observed how their reply threads acted as social spaces where Twitter users connected through shared antisemitism and other hate. Although the reinstated accounts themselves rarely posted explicit antisemitic content, we found antisemitic tropes, veiled attacks, and conspiracy theories among their networks of followers.

CTS has previously written about the phenomenon of “stochastic harassment” on Twitter, or how influential accounts act as nodes for harassment even when not explicitly directing their followers to harass anyone. All social media platforms are vulnerable to stochastic hate and harassment, and none currently have effective policies for proactively addressing how hate and harassment are networked. Twitter is especially concerning given its outsized influence and history of abuse by extremists. To combat how influential nodes stoke hate that spreads through networks, we urge Twitter and all platforms to recognize the role of networks in amplifying hate, to consider contexts that stoke online hate and abuse, and to adopt policies that take the dangers of stochastic harassment and hate into account.

Nodes of antisemitism

In November 2022, Twitter announced it would grant “general amnesty” to suspended accounts that had not violated any laws. Twitter’s updated general amnesty policy set it apart from other mainstream social media platforms like Facebook and YouTube, which have stricter rules about account reinstatement. We took advantage of the general amnesty decision to monitor high-profile reinstated accounts and their effects on networks of followers. Even though three of the 65 accounts in our sample were subsequently re-suspended, the networked relationship we observed, and its role in spreading hate, is still apparent in the others and in some accounts that were never suspended in the first place.

Whether or not the influential reinstated accounts on Twitter knowingly encouraged their networks of followers to spew hate, in practice their reply threads became magnets for vile antisemitic content.

During the period in which we collected data, CTS found 60 examples of direct replies to reinstated accounts and 127 examples of threaded replies (i.e., replies to tweets in the same conversation, but not directly replying to the reinstated account’s tweet) to which our machine learning classifier assigned high antisemitism scores. These examples demonstrate how influential accounts can facilitate antisemitic conversations in their reply threads.
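The distinction between direct and threaded replies can be sketched in a few lines of Python. This is an illustration only: the `Reply` record and its field names (`conversation_id`, `in_reply_to_id`) are assumptions made for this example, not CTS's data schema or the response format of any particular API, and the tweet IDs in the usage note are placeholders.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Reply:
    """Hypothetical reply record; the field names are assumed for illustration."""
    tweet_id: str
    conversation_id: str           # ID of the tweet that started the conversation
    in_reply_to_id: Optional[str]  # ID of the tweet this reply answers


def classify_reply(reply: Reply, root_tweet_id: str) -> str:
    """Label a reply relative to the reinstated account's tweet (the root)."""
    if reply.conversation_id != root_tweet_id:
        return "outside"   # belongs to a different conversation
    if reply.in_reply_to_id == root_tweet_id:
        return "direct"    # answers the reinstated account's tweet itself
    return "threaded"      # same conversation, but answers another reply


def count_reply_types(replies: Iterable[Reply], root_tweet_id: str) -> Counter:
    """Tally direct, threaded, and outside replies for one root tweet."""
    return Counter(classify_reply(r, root_tweet_id) for r in replies)
```

For instance, with a root tweet whose ID is `"1"`, a reply that answers `"1"` counts as direct, while a reply that answers that reply counts as threaded because it shares the conversation but not the parent.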

One of the high-profile reinstated accounts we analyzed belonged to Ali Alexander, lead organizer of the Stop the Steal campaign and promoter of Ye’s (Kanye West) 2024 presidential campaign, which has been rife with antisemitism. (Twitter later suspended Alexander again, not for antisemitic content but after reports surfaced that he had solicited nude photographs from a minor using direct messages.) In one example, Alexander quote-tweeted ADL on March 7, 2023, and deliberately took ADL’s own words out of context, which provoked antisemitic responses:

(Screenshot taken on March 15, 2023.)

Alexander’s followers took his spotlighting of ADL as an opportunity to build a reply thread featuring overt antisemitism.

(Screenshots taken on March 15, 2023.)

Neither of these replies violates Twitter’s hateful conduct policy. Yet the list of names with Israeli flags is a clear example of antisemitic tropes alleging Jewish control of business and government and Jewish-Americans’ “dual loyalty.” (None of the individuals on the list are Israeli.) And antisemites online use the phrase “the nose knows”—referencing stereotypes about Jewish anatomy—as a subtle way to signal hate without using obvious slurs or making direct threats of violence.

The accounts that posted these replies tweeted other antisemitic content from their own accounts, along with conspiracy theories such as COVID-19 denial and claims that the moon landing was faked. CTS collected over 5,000 examples of virulent antisemitism from February 2023, posted by 2,173 accounts following the reinstated accounts in our sample. Some familiar antisemitic tropes and narratives were prevalent in their tweets:

Conspiracy theories about George Soros and the Rothschild banking family controlling global politics, finance, and media. Soros in particular was the target of antisemitic vitriol from accounts linking his support of reformist district attorneys to an alleged plot to destroy American cities. Soros’s absence from the World Economic Forum (WEF) meeting due to a scheduling conflict—which occurred during the period we analyzed—also loomed large given that the WEF is a frequent topic in antisemitic conspiracy theories as well. While not every mention or critique of Soros is necessarily antisemitic, there were hundreds of examples in our data set that were.

(Screenshot taken on March 15, 2023.)

Accusations that Khazarians and “fake Jews” are responsible for the war in Ukraine and belong to a “cabal” that secretly runs the world and plans to bring about a one-world government.

Beliefs about Jews pushing a radical agenda to destroy “the West” by promoting transgender identities and lifestyles and “replacing” white people via immigration (e.g., the Great Replacement).

Characterizing Jews as inhuman “occupiers” in countries that are not theirs. Twitter includes “dehumanizing or degrading… for example, comparing a group of people to animals or viruses” as an option when reporting identity-based attacks.

(Screenshot taken on March 15, 2023.)

Several examples of accounts encouraging others to “do your own research,” or seek out fringe or unverified information sources, such as directions to “Google ‘dancing Israelis’” and links to antisemitic content on Rumble, BitChute, YouTube, and conspiracy theory websites.

Social media researchers have noted how extremists often use suggestions and references like these as methods of “redpilling,” slowly introducing tropes to bring about a radical political awakening. The ADL Center for Antisemitism Research recently found that individuals who agree with antisemitic tropes are far more likely to believe conspiracy theories.

Content moderation policies fail to address networked hate

A single tweet may not violate Twitter’s rules, but these accounts and threads showed clear patterns of antisemitism. Our findings—coupled with our previous research on how powerful influencers inspire harassment indirectly—demonstrate that Twitter’s content moderation policies are inadequate to mitigate online hate and harassment. By focusing solely on individual accounts and using a narrow definition of hateful conduct as “threats or incitement of violence,” Twitter misses how hate builds over time through networks even when it is not explicitly coordinated. Twitter did eventually take action and suspend three of the accounts we monitored. Yet each of those suspensions focused on individual actions—and in the case of Ali Alexander, had nothing to do with his role in facilitating antisemitism—and did not consider the network problem. Suspending these specific accounts removed their nodes, but the networks persist and are active in other influencers’ reply threads.

Our sample was relatively small, but the evidence warrants more research about the full scale of stochastic hate and its potential to cause harm. When antisemitism and other forms of hate go unchecked, they normalize beliefs and actions informed by a hate-based worldview. Any social media company serious about tackling online hate must apply its policies to entire reply threads so that it can consider the context of hateful posts that use innuendo and shared references to skirt the rules.

Recommendations

To combat the normalization and spread of hateful ideologies, social media companies must consider the dangers of stochastic hate and harassment and create policies and enforcement to address accounts that are nexuses of bigotry and hate.

To address these issues, we urge platforms to:

Understand stochastic harassment and implement policies to combat it.

While the threat of stochastic harassment and its harmful impacts are well-documented, social media platforms seldom consider it. Platforms must look at the role of users, especially influential ones, in furthering stochastic harassment and implement policies that prohibit it.

Proactively review threads where hateful comments are flagged instead of limiting review to individual comments and replies.

Hateful comments often aggregate in threads and groups with people who espouse hateful ideologies; one hateful comment on a thread often suggests that others may exist there, too. Online hate proliferates quickly—one comment can spark a network to react and amplify hate. Platforms should be mindful of how hate accumulates so they can develop more comprehensive approaches for dealing with harmful content.

Invest in technology that can detect coded language effectively, and train human moderators to spot such content.

Coded language refers to words that seem neutral on the surface but are intended to mean something else (for example, using “urban” as a way to refer to Black people, or “globalist” to refer to Jewish people).

Coded language can be difficult to track because it changes frequently. Platforms must invest in stronger machine learning algorithms and natural language processing (NLP) models to identify patterns, misspellings, and symbols used to violate content policies.

But automation may not always perceive the context and intent behind an expression or symbol, so platforms should continue to have human moderators with the training to spot possible coded language and assess whether it is hiding a user’s hateful intent. Civil society organizations have expert knowledge about the contexts of hate and can be valuable partners in these endeavors.

Implement reporting options that allow users to flag coded language, veiled incitement, and nodes of hate as they come across them.

Platforms should allow users to report an entire thread instead of a single reply or comment. In addition, platforms may dedicate a drop-down option in their reporting options to incidents of coded language used to mask malicious intent. These features should be easy to find and accessible.

Methods

CTS created our list of 65 reinstated accounts by:

Checking Twitter for announcements about reinstatement, either from the accounts themselves or from observers.

Cross-referencing with the accounts listed in the Wikipedia article about notable Twitter suspensions.

Consulting with our colleagues in the ADL Center on Extremism. Our list is nonpartisan and includes left- and right-wing figures.

Using Twitter’s open API, we captured tweets from each of the 65 accounts as they were posted between February 1 and March 8, 2023. Once per week, we processed those tweets through our own Online Hate Index (OHI) antisemitism classifier and Jigsaw’s Perspective model for toxicity and identity-based attacks. We then manually reviewed all of the tweets that scored above a 0.9 threshold for each tool (the highest possible score for both the OHI and Perspective is 1.0, indicating the highest level of confidence that the content is antisemitic, toxic, or an identity-based attack).
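The threshold step described above can be sketched as a simple filter. The Python below is a minimal illustration under stated assumptions, not CTS's pipeline: the `ScoredTweet` record and its field names are hypothetical, the scores are assumed to have been computed already (no classifier or API is called), and the sketch assumes a tweet is queued for manual review when any one of its scores exceeds 0.9, a detail the text does not spell out.

```python
from dataclasses import dataclass
from typing import Iterable, List

REVIEW_THRESHOLD = 0.9  # cutoff stated in the Methods text; scores range up to 1.0


@dataclass(frozen=True)
class ScoredTweet:
    """Hypothetical record of a tweet with precomputed classifier scores."""
    tweet_id: str
    text: str
    ohi_antisemitism: float             # assumed name for the OHI score
    perspective_toxicity: float         # assumed name for the Perspective toxicity score
    perspective_identity_attack: float  # assumed name for the identity-attack score


def needs_manual_review(tweet: ScoredTweet,
                        threshold: float = REVIEW_THRESHOLD) -> bool:
    """Return True if any score is strictly above the threshold (an assumption)."""
    scores = (
        tweet.ohi_antisemitism,
        tweet.perspective_toxicity,
        tweet.perspective_identity_attack,
    )
    return any(score > threshold for score in scores)


def select_for_review(tweets: Iterable[ScoredTweet],
                      threshold: float = REVIEW_THRESHOLD) -> List[ScoredTweet]:
    """Keep only the tweets that a human reviewer should look at, in input order."""
    return [t for t in tweets if needs_manual_review(t, threshold)]
```

A filter like this only narrows the queue. In the workflow described here, a person still reads every tweet that passes it before it counts as an example.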

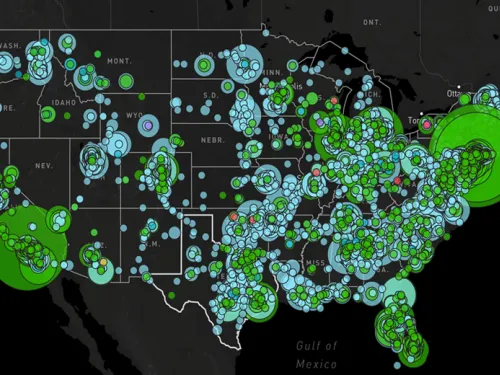

Additionally, we pulled tweets from all of the accounts that followed the reinstated accounts in our sample. We looked at both tweets and replies for all of the accounts in this broader network and processed them in the same manner using the OHI and Jigsaw’s Perspective models.